What is GigaVoxels / GigaSpace?

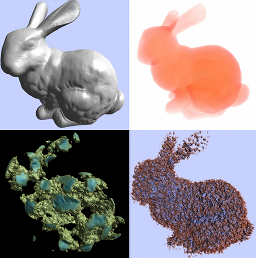

GigaVoxels

GigaVoxels is an open library for GPU-based, real-time, high-quality rendering of very detailed and large objects and scenes (encoded as SVO, Sparse Voxel Octrees, but not necessarily fuzzy or transparent: see History). It can easily be mixed with ordinary OpenGL objects and scenes.

Its secret lies in lazy evaluation: chunks of data (i.e., bricks) are loaded or generated only once proven necessary for the image, and only at the necessary resolution. They are then kept in an LRU cache on the GPU for the next frames. Thus, hidden data has no impact at all on management, storage, or rendering. Similarly, fine details have no impact on cost or aliasing if they are smaller than the pixel size.
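To make the lazy-evaluation idea concrete, here is a minimal CPU-side sketch of an LRU brick cache that calls a producer only on a miss. It is an illustration under assumptions, not the GigaVoxels implementation: the class name `BrickCache`, its `get` method, and the `produced` counter are hypothetical, and the real cache lives in GPU memory.

```python
from collections import OrderedDict


class BrickCache:
    """Toy LRU cache (hypothetical API): bricks are produced on first request only."""

    def __init__(self, capacity, producer):
        self.capacity = capacity      # maximum number of bricks kept
        self.producer = producer      # called only on a cache miss
        self.bricks = OrderedDict()   # key -> brick, least recently used first
        self.produced = 0             # number of producer calls so far

    def get(self, key):
        if key in self.bricks:
            self.bricks.move_to_end(key)       # mark as most recently used
            return self.bricks[key]
        brick = self.producer(key)             # lazy production on a miss
        self.produced += 1
        self.bricks[key] = brick
        if len(self.bricks) > self.capacity:
            self.bricks.popitem(last=False)    # evict the least recently used
        return brick
```

A brick that is never requested is never produced, and a brick requested every frame stays at the recently-used end of the cache, so it is not evicted while in use.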

GigaVoxels makes simple things easy, and exposes the engine progressively depending on the customization you need. It comes with plenty of examples that illustrate possibilities and variants, and that ease your tests and prototyping through copy-pasting.

- In the most basic usage, one of the examples already does what you want (good for you: nothing to code!).

- The most basic programming usage consists of writing the producer callback function that the GigaVoxels renderer will call at the last moment to obtain data for a given cube of space at a given resolution. This function can have one part on the CPU side and one part on the GPU side. You might simply want to transfer data from CPU to GPU, or possibly unpack or amplify it on the GPU side. You might also generate it procedurally (e.g., volume Perlin noise), or convert the requested chunk of data[1] from another shape structure already present in GPU memory (e.g., a VBO mesh).

- You probably also want to add a user-defined voxel shader to tune the appearance (the view-dependent BRDF, but probably also the material color rather than storing it in voxels) or even tweak the data on the fly. In fact, it is up to you to choose what should be generated (or pre-stored) on the CPU, in the GPU producer, or on the fly in the voxel shader, depending on your preferred balance between storage, performance, and the need for immediate change. E.g., if you want to let a user play with a color LUT or a density transfer function, or tune hypertexture parameters, this should be done in the voxel shader for immediate feedback; otherwise all the already produced bricks would have to be invalidated. But once these parameters are fixed, rendering will be faster if the data is fully cooked in the producer and kept ready to use in the cache.

- You might want to handle non-RGB values (multispectral, X-rays...), or handle values in a non-trivial way (polarization, HDR). GigaVoxels lets you easily define the type of voxel data. You can also customize pre- and post-rendering tasks (e.g., to mix with an OpenGL scene), various early tests, hints, and flags for preferred behaviors (e.g., creation priority strategy in strict time-budget applications).

- The basic GigaVoxels relies on a dynamic octree partitioning of space, whose nodes correspond to voxel bricks wherever space is not empty. But you may prefer another space partitioning scheme, such as an N^3-tree, a k-d tree, or even a BSP-tree. You may also prefer to store something other than voxels in the local data chunk, e.g., triangles, points, procedural parameters, or internal data that eases updates or subdivision and avoids a costly rebuild from scratch.
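The producer callback mentioned in the list above can be sketched on the CPU as follows. This is only an illustration of the contract "given a cube of space and a resolution, return the data": the names `produce_brick`, `sphere_density`, and `BRICK_SIZE`, the unit-cube domain, and the convention that level 0 is the root are all assumptions, not the GigaVoxels API.

```python
BRICK_SIZE = 8  # voxels per brick edge (assumed value)


def sphere_density(x, y, z):
    """Procedural field: 1.0 inside a sphere of radius 0.4 centered in the unit cube."""
    d2 = (x - 0.5) ** 2 + (y - 0.5) ** 2 + (z - 0.5) ** 2
    return 1.0 if d2 <= 0.4 ** 2 else 0.0


def produce_brick(level, ix, iy, iz, field=sphere_density):
    """Sample `field` over octree node (ix, iy, iz) at depth `level` (0 = root).

    Returns a flat list of BRICK_SIZE**3 values, or None if the node is empty.
    """
    node_size = 1.0 / (2 ** level)    # edge length of the node in the unit cube
    voxel = node_size / BRICK_SIZE    # edge length of one voxel at this level
    brick = []
    for k in range(BRICK_SIZE):
        for j in range(BRICK_SIZE):
            for i in range(BRICK_SIZE):
                # sample at the voxel center
                x = ix * node_size + (i + 0.5) * voxel
                y = iy * node_size + (j + 0.5) * voxel
                z = iz * node_size + (k + 0.5) * voxel
                brick.append(field(x, y, z))
    return brick if any(brick) else None
```

Every brick has the same number of voxels whatever its level, so deeper nodes simply cover a smaller cube at a finer resolution. Returning `None` for empty regions is one way to signal that no brick needs to be stored there.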

GigaSpace

GigaSpace is an open GPU-based library for the efficient management of huge data. It consists of a set of 4 components, all customizable:

- A multi-scale space-partitioning dynamic tree structure.

- A cache-manager storing constant-size data chunks corresponding to non-empty nodes of the space partition.

- A visitor function marching through the data (in fact, three: a space-partitioning visitor, a chunk visitor, and a point data accessor).

- A data producer called when missing data are encountered by the visitor.

Data types can be anything of your choice as long as chunks have a fixed size; 'space' can represent any variety as long as you know how to partition and visit it.
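To illustrate how these components fit together outside the voxel setting, the sketch below uses a 1D space, [0, 1), partitioned by a binary tree. A plain dictionary stands in for the cache and tree, `visit` is the visitor, and `producer` is called only for missing nodes. All names and the choice of a 1D space are assumptions made for illustration.

```python
CHUNK_SIZE = 4   # every chunk holds the same number of samples (assumed value)
calls = []       # records producer requests, for inspection only


def producer(level, index):
    """Toy producer: data exists only on [0, 0.5); other nodes are empty (None)."""
    calls.append((level, index))
    width = 1.0 / 2 ** level
    lo = index * width
    if lo >= 0.5:
        return None
    # fixed-size chunk: sample positions inside the node interval
    return tuple(lo + (i + 0.5) * width / CHUNK_SIZE for i in range(CHUNK_SIZE))


def visit(cache, producer, x, target_level):
    """Descend the binary partition of [0, 1) toward point x.

    cache maps (level, index) -> chunk, or None for an empty node.
    Returns the finest non-empty chunk containing x, up to target_level.
    """
    best = None
    for level in range(target_level + 1):
        index = min(int(x * 2 ** level), 2 ** level - 1)
        key = (level, index)
        if key not in cache:           # missing data: ask the producer
            cache[key] = producer(level, index)
        if cache[key] is None:         # empty space: stop refining
            break
        best = cache[key]
    return best
```

Swapping the binary tree for an octree, the tuples for voxel bricks, and `visit` for a cone-tracer gives the GigaVoxels configuration; the control flow between visitor, cache, and producer stays the same.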

You may have guessed it: GigaVoxels and GigaSpace are one and the same thing. GigaSpace is just the generalized way of seeing the tool. The basic use of GigaVoxels uses octrees as the tree, voxel bricks as the data chunks, and volume cone-tracing[2] as the visitor, so this naming is preferred by the SVO community. But we provide many other examples showing other choices, since the potential use is a lot broader than SVOs.

CUDA vs OpenGL vs GLSL

- The GigaVoxels/GigaSpace data management is (now) a CUDA library; it used to be on the CPU in our early stages.

- The renderer is provided in both CUDA and GLSL. So, as long as you don't need to produce new bricks, you can render GigaVoxels data entirely in OpenGL without switching to CUDA if you wish, for instance as pixel shaders bound to a mesh.

Still, when using the CUDA renderer, which embeds the dynamic data management, GigaVoxels allows various modes of interoperability with OpenGL:

- Zbuffer integration: You can do a first pass with OpenGL, then call GigaVoxels and provide it with the OpenGL color and depth buffers (and possibly shadow-maps, light-maps, and env-maps) so that fragments of both sources are correctly integrated. Similarly, GigaVoxels can return its color and depth buffers to OpenGL, and so on for further passes if needed. Note that hidden voxels won't render at all: interoperability keeps our golden rule, "pay only for what you really see".

- Volume clipping: It is easy to tell GigaVoxels to render only behind or in front of a depth-buffer, which makes interactive volume clipping trivial for volume visualization.

- Volumetric object instances and volumetric material inside meshes: To let OpenGL display cloned, transformed instances of a given volume (e.g., to render a forest from a volumetric tree), or display shapes carved into some openwork 3D material, you can use an OpenGL bounding box or a proxy geometry, use their object-view matrix to rotate the volume view angle accordingly, possibly provide a mask of visible fragments, and tell GigaVoxels to render only from the front depth and (optionally) to stop before the rear depth.

- Auxiliary volumes for improved rendering effects; ray-tracing as second bounce: GigaVoxels is basically a ray-tracer, so it can easily deal with reflection, refraction, and fancy cameras such as fisheye lenses. Moreover, as a cone-tracer it is especially good at blurry rendering and soft shadows. Why not use it only to improve some parts of your classical OpenGL scene-graph rendering? By binding GigaVoxels to a classical GLSL surface shader, you can easily launch the reflected, refracted, or shadow rays in GigaVoxels within a (probably crude) voxelized version of the scene. Real-time global illumination was achieved in this spirit in the GI-Voxels work.
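Zbuffer integration, volume clipping, and rendering between a front and a rear depth all amount to restricting the ray-march to a depth interval and blending what remains with the OpenGL fragment behind it. The sketch below shows this for a single ray and a single gray channel. The function `march_between_depths` and its parameters are hypothetical, and the opacity model (density times step length, clamped to 1) is a simplifying assumption.

```python
def march_between_depths(density_at, t_front, t_rear, step,
                         background=0.0, voxel_color=1.0):
    """Front-to-back compositing of a volume restricted to [t_front, t_rear).

    density_at(t): volume density at distance t along the ray.
    t_front:       start depth (e.g. a clipping surface or front proxy face).
    t_rear:        stop depth (e.g. the OpenGL depth-buffer value for this pixel).
    background:    color of the OpenGL fragment located at t_rear.
    Returns (color, alpha) for the pixel.
    """
    color, alpha = 0.0, 0.0
    t = t_front
    while t < t_rear and alpha < 0.99:           # stop once nearly opaque
        a = min(1.0, density_at(t) * step)       # opacity of this step (assumed model)
        color += (1.0 - alpha) * a * voxel_color
        alpha += (1.0 - alpha) * a
        t += step
    # remaining transmittance lets the OpenGL fragment show through
    return color + (1.0 - alpha) * background, alpha
```

Voxels behind an opaque OpenGL surface are never sampled, since the loop stops at `t_rear`, and the loop also stops as soon as the accumulated opacity saturates. Both behaviors reflect the "pay only for what you really see" rule.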

History and roots of GigaVoxels

GigaVoxels draws on:

- The idea that voxel ray-tracing is a very efficient and compact way to manage highly detailed surfaces (not necessarily fuzzy or transparent), as shown early on in real time by game-industry figure John Carmack (id Software) with Sparse Voxel Octrees (SVO), and for high-quality rendering by Jim Kajiya with Volumetric Textures, first dedicated to furry teddy bears, then generalized by Fabrice Neyret.

- The idea of differential cone-tracing which allows for zero-cost antialiasing on volume data by relying on 3D MIP-mapping.

- The technology of smart texture management, which determines visible parts on the fly and then streams or generates the data at the necessary resolution. It started with SGI clipmaps and later variants for very large textured terrains in flight simulators, and was then generalized as a cache of multiscale texture tiles fed by on-demand production, by Sylvain Lefebvre et al.

- Cyril Crassin connected all these ideas together during his PhD to lay the foundations of the GigaVoxels system.

- Note that Proland, the quality real-time all-scale Earth renderer (also from our team), is based on the same spirit for its multi-scale 2D chunks of terrain.

Footnotes

- The basic use of GigaVoxels considers voxel grids, since a screen-oriented strategy is especially efficient for rendering complex data: data are visited directly and in depth order, without having to consider or sort any other data (they come naturally sorted), while OpenGL, which is scene-oriented, has to visit and screen-project all existing elements, an especially inefficient strategy for highly complex scenes. LOD and antialiasing are a piece of cake with voxels (neighborhood is explicit), and soft shadows as well as depth of field are easy to obtain; indeed, they are faster than sharp images! The implicit sorting permits the deferred loading or creation of the chunks that the GigaVoxels cache will keep for the next frames. So, your producer might be as complex as an iterative algorithm or even a local voxelizer of a large VBO (by the way, we do provide one).

- Volume cone-tracing is basically volume ray-marching with 3D MIP-mapping.
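As a rough illustration of that last footnote, the MIP level sampled at each ray-marching step can be derived from the cone footprint at the current distance. The function below is a sketch under assumptions: the name `mip_level`, its parameters, and the texture-style convention that level 0 is the finest resolution are not taken from GigaVoxels.

```python
import math


def mip_level(t, cone_half_angle, finest_voxel_size, max_level):
    """MIP level whose voxel size matches the cone diameter at distance t.

    Level 0 is the finest resolution; each next level doubles the voxel size.
    The result is fractional so that two adjacent levels can be interpolated.
    """
    diameter = 2.0 * t * math.tan(cone_half_angle)   # cone footprint at distance t
    if diameter <= finest_voxel_size:
        return 0.0                                    # finer than the data: clamp
    return min(float(max_level), math.log2(diameter / finest_voxel_size))
```

For a camera ray, the cone half-angle would typically be chosen so that the footprint matches one pixel. Details smaller than the footprint are then read pre-filtered from a coarser level, which is why they add neither cost nor aliasing.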